Can Event management help foster a curiosity for innovative ways to improve application performance? Blue-sky thinkers may not want to deal with the myriad details of managing the events generated operationally, but they could learn something from this exercise.

Consider the major system failures in your organization over the last 12 to 18 months. What if you had a system or process in place to capture those failures and mitigate them proactively, preventing them from recurring? How much better off would you be if you could avoid the proverbial “Groundhog Day” of system outages?

The argument that system monitoring is just a nice-to-have, and not really a core requirement for operational readiness, dissipates quickly when a critical application goes down with no warning.

Starting with the Event management and Incident management processes may seem like a reactive approach when implementing an Application Performance Management (APM) solution, but is it really? If “Rome is burning”, wouldn’t the most prudent action be to extinguish the fire first, then come up with a proactive approach for prevention? Managing the operational noise can calm the environment, allowing you to focus on APM strategy more effectively.

Asking the right questions during a post-mortem review will help generate dialog and outline options for alerting and prevention. This will direct your thinking towards continual improvement and help establish proactive monitoring as an operational requirement. Here are three questions that build on each other as you work to mature your solution:

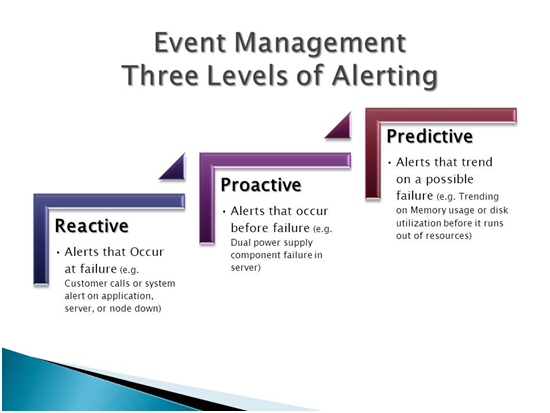

1. Did we alert on it when it went down, or did the user community call us?
2. Can we get a proactive alert on it before it goes down (e.g. dual power supply failure in server)?
3. Can we trend on the event, creating a predictive alert before it is escalated (e.g. disk space utilization to trigger a minor@90%, major@95%, critical@98%)?

The preceding questions map directly to the following categories, respectively: Reactive, Proactive, and Predictive.
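To make the escalation example from the third question concrete, here is a minimal sketch of how a disk utilization reading could be mapped to a severity. The thresholds (minor@90%, major@95%, critical@98%) come from the question itself; the names `SEVERITY_THRESHOLDS` and `classify_disk_usage` are hypothetical and not tied to any particular monitoring product.

```python
from typing import Optional

# Thresholds taken from the example in the third question:
# minor@90%, major@95%, critical@98%.
# Ordered from most to least severe so the first match wins.
SEVERITY_THRESHOLDS = [
    ("critical", 98.0),
    ("major", 95.0),
    ("minor", 90.0),
]


def classify_disk_usage(percent_used: float) -> Optional[str]:
    """Return the alert severity for a disk utilization reading.

    Returns None when the reading is below every threshold,
    meaning no alert should be raised.
    """
    for severity, threshold in SEVERITY_THRESHOLDS:
        if percent_used >= threshold:
            return severity
    return None


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    for reading in (72.5, 91.0, 96.3, 99.1):
        print(reading, classify_disk_usage(reading))
```

Checking the most severe threshold first means a single reading raises only one alert at the highest applicable level, which helps keep operational noise down.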

Reactive – Alerts that occur at failure

Multiple events can occur before a system failure; eventually an alert will come in notifying you that an application is down. It will come either from users calling the Service Desk to report an issue, or from a system-generated event that corresponds with the application failure.

Proactive – Alerts that occur before failure

These alerts will most likely come from proactive monitoring and tell you that there are component failures that need attention but have not yet affected overall application availability (e.g. dual power supply failure in server).

Predictive – Alerts that trend on a possible failure

These alerts are usually set up in parallel with trending reports that help detect subtle changes in the environment (e.g. trending on memory usage or disk utilization before running out of resources).
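One way to picture a predictive alert is to fit a straight line through recent utilization samples and estimate how long it will take to reach a threshold. The sketch below assumes a simple least-squares linear trend, which is an illustrative choice and not a method prescribed here; the function name `hours_until_threshold` and the sample format are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def hours_until_threshold(
    samples: Sequence[Tuple[float, float]],
    threshold: float = 90.0,
) -> Optional[float]:
    """Estimate the hours remaining until utilization reaches `threshold`.

    `samples` is a sequence of (hours_elapsed, percent_used) pairs in
    chronological order. A least-squares line is fitted through them
    (an assumed, deliberately simple trend model).

    Returns 0.0 if the latest sample is already at or above the threshold,
    and None if there are too few samples or the trend is flat or falling.
    """
    if len(samples) < 2:
        return None

    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_u = sum(u for _, u in samples) / n

    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        return None  # all samples share one timestamp; no trend to fit

    slope = sum((t - mean_t) * (u - mean_u) for t, u in samples) / var_t
    intercept = mean_u - slope * mean_t

    latest_t, latest_u = samples[-1]
    if latest_u >= threshold:
        return 0.0
    if slope <= 0:
        return None  # utilization is not growing; nothing to predict

    crossing_time = (threshold - intercept) / slope
    return max(crossing_time - latest_t, 0.0)


if __name__ == "__main__":
    # Hypothetical daily disk readings: (hours elapsed, percent used).
    readings = [(0, 70.0), (24, 72.0), (48, 74.0), (72, 76.0)]
    print(hours_until_threshold(readings, threshold=90.0))
```

A result measured in days rather than hours leaves time to add capacity or clean up before the first minor alert ever fires, which is the point of trending on the event.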

Conclusion

Once you build awareness in the organization that you have a bird’s-eye view of the technical landscape and the ability to monitor the ecosystem of each application (as an ecologist would), people become more meticulous when introducing new elements into the environment. They know that you are watching, taking samples, and trending on the overall health and stability, which leaves you free to focus on the strategic side of APM without distraction.

Related Links:

For a high-level view of a much broader technology space, refer to the slide show on BrightTALK.com, which describes “The Anatomy of APM - Webcast” in more context.

For more information on the critical success factors in APM adoption and how this centers around the End-User-Experience (EUE), read The Anatomy of APM and the corresponding blog APM’s DNA – Event to Incident Flow.

Prioritizing Gartner's APM Model

APM and MoM – Symbiotic Solution Sets